IN A NUTSHELL

- A guide to integrating smart AI virtual assistants directly into your Spree/Ruby on Rails store using RAG, moving beyond generic chatbots to provide precise, context-aware product recommendations.
- How to leverage open-source AI locally: deploying powerful LLMs such as Mistral via Ollama and managing product embeddings with Qdrant, all within your development environment.
- Key AI pain points, and how Retrieval-Augmented Generation (RAG) directly addresses LLM “hallucinations” and static knowledge bases by dynamically feeding in real-time product data, keeping customer interactions accurate and up to date.

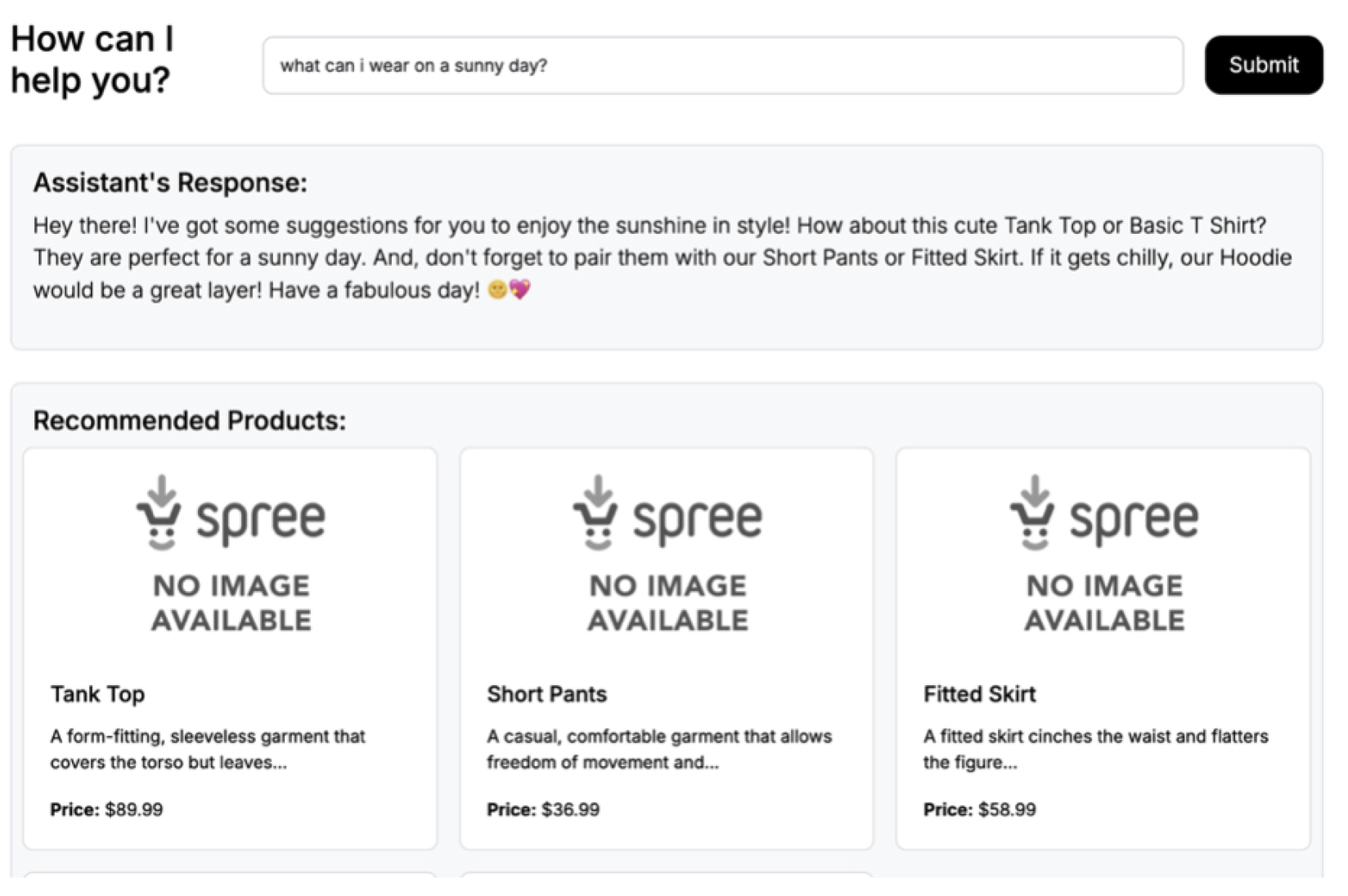

Your customer asks, “What can I wear on a sunny day?” and your virtual assistant replies, “Hey there! I’ve got just the right clothes to keep you in style on this perfect sunny day!”

We’ll use Ollama to run the Mistral LLM locally, Langchain.rb to interact with the model, and Qdrant as our vector database to store product embeddings.

Install Qdrant via Docker. Thanks to the volume mount, all vector collection data will persist in the qdrant-data folder inside your current directory:

```bash
docker pull qdrant/qdrant

docker run -p 6333:6333 \
  -v $(pwd)/qdrant-data:/qdrant/storage \
  qdrant/qdrant
```

Install Ollama, the app that will run and manage our LLMs locally. It provides an installer: https://ollama.com/download/mac

Once Ollama is installed, pull the Mistral model, which we’ll use to create the embeddings (and later to generate responses):

```bash
ollama pull mistral
```
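Ollama serves models over a local HTTP API, by default on port 11434. To make the embedding step less of a black box, here is a rough stdlib-only sketch that builds a request for Ollama’s /api/embeddings endpoint and parses the vector out of a response body. The URL, endpoint choice and helper names are assumptions for illustration; in the rest of this guide langchainrb makes these calls for us.

```ruby
require 'json'
require 'net/http'
require 'uri'

# Assumed address of a local Ollama server and its embeddings endpoint.
OLLAMA_EMBEDDINGS_URL = URI('http://localhost:11434/api/embeddings')

# Builds (but does not send) a POST request asking the model to embed `text`.
def build_embedding_request(text, model: 'mistral')
  request = Net::HTTP::Post.new(OLLAMA_EMBEDDINGS_URL)
  request['Content-Type'] = 'application/json'
  request.body = JSON.generate({ model: model, prompt: text })
  request
end

# Extracts the vector from a response body shaped like {"embedding": [...]}.
def parse_embedding(body)
  JSON.parse(body).fetch('embedding')
end

# Sends the request; needs Ollama running with the model already pulled.
def fetch_embedding(text)
  request = build_embedding_request(text)
  response = Net::HTTP.start(OLLAMA_EMBEDDINGS_URL.host, OLLAMA_EMBEDDINGS_URL.port) do |http|
    http.request(request)
  end
  parse_embedding(response.body)
end
```

Calling fetch_embedding('red summer dress') against a running Ollama should return a plain Array of Floats, which is the same kind of value our Mistral client hands to Qdrant.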

Add the necessary gems to our Gemfile:

```ruby
# Langchain for LLM interactions and embeddings
gem 'langchainrb'

# HTTP client for making API requests
gem 'faraday'

# Vector database client for storing and querying embeddings
gem 'qdrant-ruby'
```

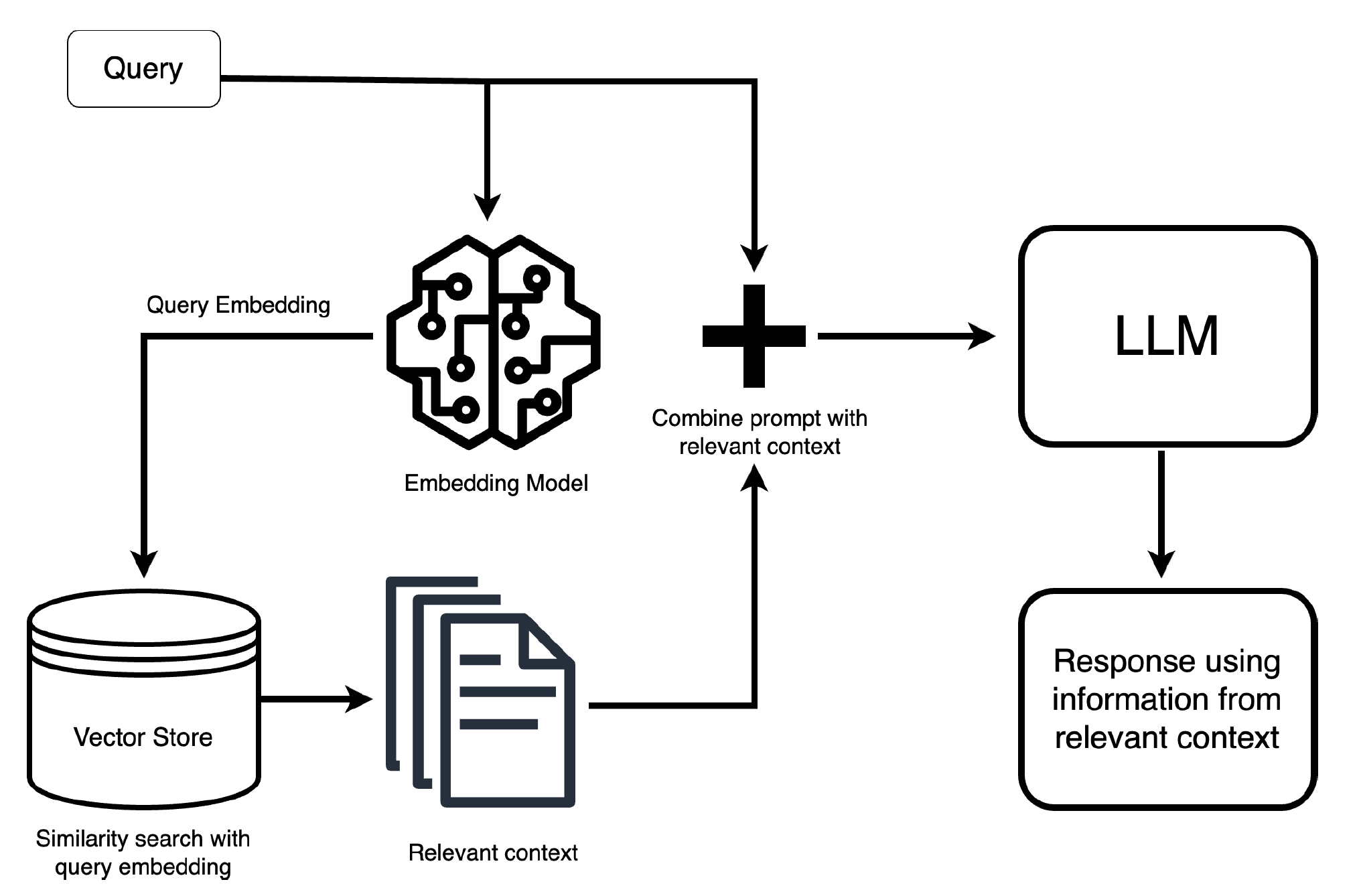

Our flow looks like the diagram above.

Source: Clarifai Blog, “What is RAG?”
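The retrieval step in that flow boils down to nearest-neighbour search: embed the question, then find the stored product vectors that point in the most similar direction. Qdrant does this at scale with an approximate index; the toy in-memory version below only illustrates the idea with cosine similarity. The 3-dimensional vectors and product names are made up and stand in for real Mistral embeddings.

```ruby
# Cosine similarity: dot product divided by the product of the vector lengths.
def cosine_similarity(a, b)
  dot = a.zip(b).sum { |x, y| x * y }
  norm_a = Math.sqrt(a.sum { |x| x * x })
  norm_b = Math.sqrt(b.sum { |x| x * x })
  return 0.0 if norm_a.zero? || norm_b.zero?

  dot / (norm_a * norm_b)
end

# Returns the names of the `limit` catalog entries most similar to the query.
def top_k(query_vector, catalog, limit: 2)
  catalog
    .map { |name, vector| [name, cosine_similarity(query_vector, vector)] }
    .max_by(limit) { |_, score| score }
    .map(&:first)
end

# Made-up toy embeddings
CATALOG = {
  'Linen Shirt' => [0.9, 0.1, 0.0],
  'Wool Coat' => [0.0, 0.2, 0.9],
  'Sun Hat' => [0.8, 0.3, 0.1]
}.freeze

# A made-up "sunny day" query vector
p top_k([1.0, 0.2, 0.0], CATALOG) # prints ["Linen Shirt", "Sun Hat"]
```

The wool coat scores close to zero because its vector points in a different direction, which is exactly why a “sunny day” question surfaces summer items.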

A rake task to create our product embeddings:

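```ruby
# Namespace name is illustrative; run with: bin/rails embeddings:create
namespace :embeddings do
  desc 'Create Qdrant embeddings for all Spree products'
  task create: :environment do
    llm_client = Llm::Mistral::Client.new
    options = VectorDb::Qdrant::Client::QDRANT_OPTIONS
    qdrant = ::Qdrant::Client.new(url: options[:url], api_key: options[:api_key])
    collection = options[:index_name]

    # Ensure the Qdrant collection exists, sized to the embedding dimension
    # (a probe embedding avoids hard-coding Mistral's vector size)
    existing = qdrant.collections.list.dig('result', 'collections').to_a.map { |c| c['name'] }
    unless existing.include?(collection)
      dimension = llm_client.embed('dimension probe').size
      qdrant.collections.create(
        collection_name: collection,
        vectors: { size: dimension, distance: 'Cosine' }
      )
    end

    # Iterate through Spree products, embed each one and upsert it as a point
    Spree::Product.find_each do |product|
      embedding = llm_client.embed(prepare_text(product))
      payload = {
        name: product.name,
        description: product.description,
        price: product.price.to_f,
        slug: product.slug
      }

      response = qdrant.points.upsert(
        collection_name: collection,
        points: [{ id: product.id, vector: embedding, payload: payload }]
      )
      puts "Embedding upserted for Product ID: #{product.id} - Response: #{response}"
    end

    puts 'Embeddings created for all products!'
  end

  # Builds the text that gets embedded for a product
  def prepare_text(product)
    name = product.name || 'N/A'
    description = product.description || 'No description available'
    price = product.price || 0.0
    "Product Name: #{name} | Description: #{description} | Price: $#{format('%.2f', price)}"
  end
end
```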
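To see exactly what gets embedded and stored, here is the text preparation and point construction from the rake task in plain Ruby. A hypothetical Struct stands in for Spree::Product, and build_point is an illustrative helper (not part of Spree or Qdrant) that assembles the three parts of a Qdrant point: an id, a vector and a payload.

```ruby
# Hypothetical stand-in for Spree::Product with only the fields we use.
Product = Struct.new(:id, :name, :description, :price, :slug, keyword_init: true)

# Mirrors the rake task's prepare_text: one line of text per product.
def prepare_text(product)
  name = product.name || 'N/A'
  description = product.description || 'No description available'
  price = product.price || 0.0
  "Product Name: #{name} | Description: #{description} | Price: $#{format('%.2f', price)}"
end

# Illustrative helper: one Qdrant point (id + vector + payload) per product.
def build_point(product, embedding)
  {
    id: product.id,
    vector: embedding,
    payload: {
      name: product.name,
      description: product.description,
      price: product.price.to_f,
      slug: product.slug
    }
  }
end

shirt = Product.new(id: 1, name: 'Denim Shirt', description: nil, price: 11.99, slug: 'denim-shirt')
puts prepare_text(shirt)
# prints: Product Name: Denim Shirt | Description: No description available | Price: $11.99
```

Storing price as a Float in the payload keeps it a JSON number, so it can be formatted again later without any string parsing.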

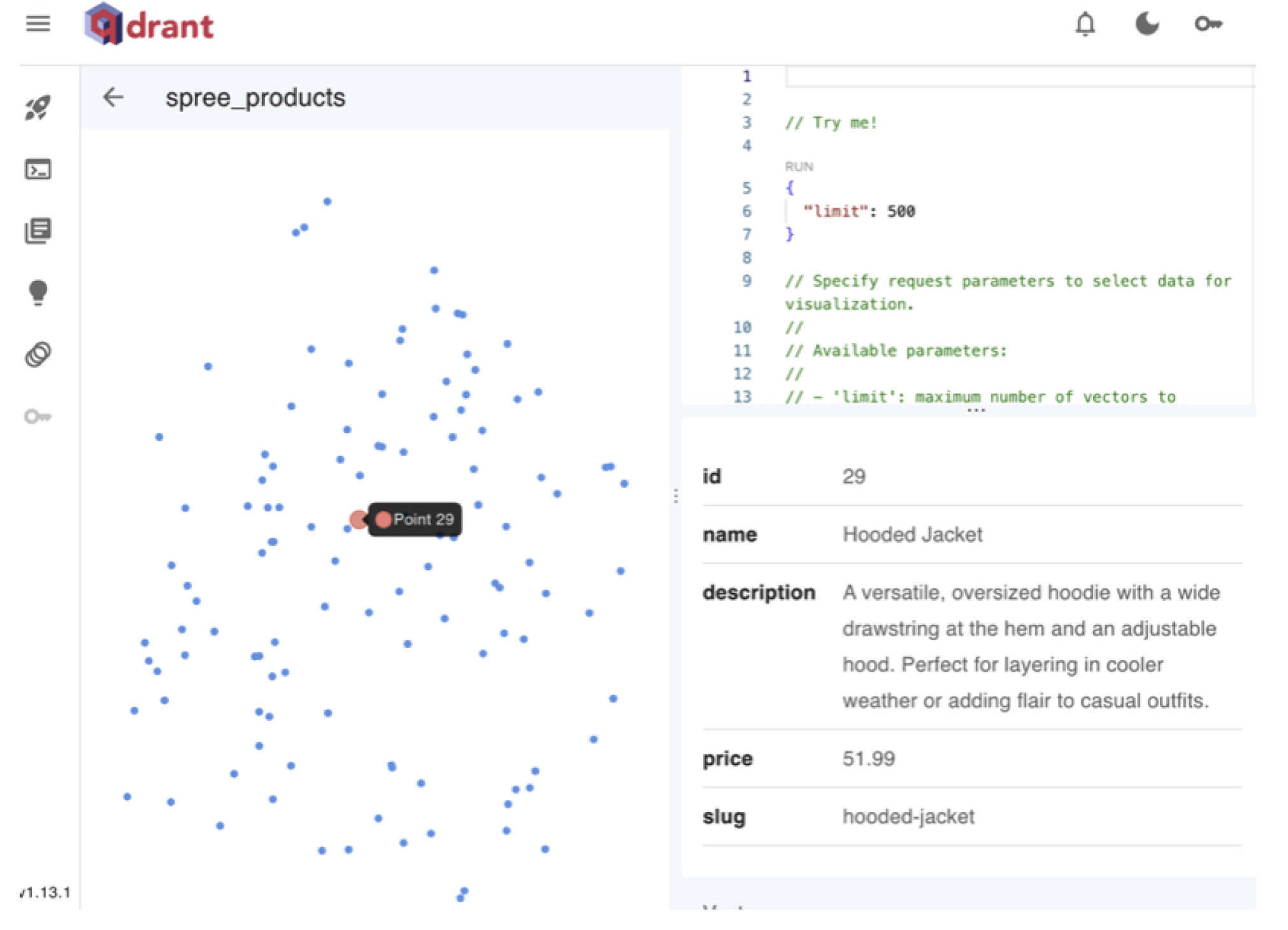

A client wrapper for the Qdrant vector database that provides vector-similarity search over our Spree products:

```ruby
module VectorDb
  module Qdrant
    class Client
      QDRANT_OPTIONS = {
        url: 'http://localhost:6333',
        api_key: nil,
        index_name: 'spree_products'
      }.freeze

      def initialize(llm = default_llm)
        qdrant_options = QDRANT_OPTIONS.merge(llm:)
        # We only keep the raw qdrant-ruby client that Langchain builds
        @client = Langchain::Vectorsearch::Qdrant.new(**qdrant_options).client
      end

      # Returns Qdrant's parsed JSON response; matches are under 'result'
      def search(vector, limit: 5, with_payload: true)
        @client.points.search(
          collection_name: QDRANT_OPTIONS[:index_name],
          vector:,
          limit:,
          with_payload:
        )
      end

      private

      def default_llm
        @default_llm ||= Llm::Mistral::Client.new
      end
    end
  end
end
```
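The wrapper’s search returns Qdrant’s parsed JSON body, where the matches sit under a "result" key, each with an id, a similarity score and the stored payload. The sketch below works on a hand-written response of that shape (the values are made up) and shows one optional refinement: dropping weak matches below a score cutoff. The min_score parameter is a hypothetical knob that the guide’s code does not use; Qdrant’s search API also accepts a score_threshold to do this server-side.

```ruby
# A made-up response in the shape Qdrant returns for a points search.
SAMPLE_RESPONSE = {
  'result' => [
    { 'id' => 7, 'score' => 0.82, 'payload' => { 'name' => 'Regular Shirt', 'price' => 91.99 } },
    { 'id' => 3, 'score' => 0.41, 'payload' => { 'name' => 'Scrappy Top', 'price' => 47.99 } }
  ],
  'status' => 'ok'
}.freeze

# Returns the payloads of matches scoring at least min_score (hypothetical cutoff).
def confident_payloads(response, min_score: 0.5)
  response.fetch('result', [])
          .select { |point| point['score'] >= min_score }
          .map { |point| point['payload'] }
end

p confident_payloads(SAMPLE_RESPONSE).map { |payload| payload['name'] } # prints ["Regular Shirt"]
```

A cutoff like this trades recall for relevance: the assistant may suggest fewer products, but it is less likely to recommend something unrelated to the question.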

A client for interacting with the Mistral LLM through Ollama, providing methods for text embedding and completion:

```ruby
module Llm
  module Mistral
    class Client
      # Option key names have changed between langchainrb versions; check the
      # Langchain::LLM::Ollama class in the version you install.
      DEFAULT_OPTIONS = {
        completion_model: 'mistral',
        embedding_model: 'mistral',
        chat_completion_model: 'mistral',
        temperature: 0.7
      }.freeze

      attr_reader :llm

      # Constructor to initialize the LLM instance with options
      def initialize(options = DEFAULT_OPTIONS)
        @llm = Langchain::LLM::Ollama.new(default_options: options)
      end

      # Returns the embedding vector (an Array of Floats) for the given text
      def embed(text)
        llm.embed(text:).embedding
      end

      # Returns the generated text from Ollama's raw (non-streamed) response
      def complete(prompt)
        llm.complete(prompt:).raw_response['response']
      end
    end
  end
end
```
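Because the rest of the code talks to Llm::Mistral::Client only through embed and complete, specs can swap in a stand-in that doesn’t need Ollama running. FakeLlmClient below is a hypothetical test double, not part of langchainrb: it produces deterministic toy “embeddings” by counting words into a small fixed number of buckets, and returns a canned completion.

```ruby
# Hypothetical test double with the same interface as Llm::Mistral::Client.
class FakeLlmClient
  DIMENSIONS = 8

  def initialize(reply: 'Here are some ideas!')
    @reply = reply
  end

  # Bag-of-words vector: each lowercase word adds 1.0 to a bucket chosen by
  # its byte sum, so the same text always yields the same vector.
  def embed(text)
    vector = Array.new(DIMENSIONS, 0.0)
    text.downcase.scan(/[a-z]+/).each { |word| vector[word.sum % DIMENSIONS] += 1.0 }
    vector
  end

  # Ignores the prompt and returns the canned reply.
  def complete(_prompt)
    @reply
  end
end

llm = FakeLlmClient.new
p llm.embed('Sunny day, sunny shirt').size # prints 8
```

Determinism is the point of the design: String#hash is randomised per process, whereas String#sum is stable, so test expectations don’t flake between runs.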

In summary, our rake task:

- Initialises the Mistral and Qdrant clients
- Loops through all products
- Prepares the text for each product
- Uses Mistral to generate the product embedding
- Stores the embedding in Qdrant
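Those steps can be sketched as a plain function with injected collaborators, which also makes one easy improvement visible: upserting points in batches instead of sending one request per product. index_products, the batch size, and the duck-typed store (anything with an upsert(points) method) are hypothetical, and products are plain hashes here; with the qdrant-ruby gem the store’s upsert would wrap a points.upsert(collection_name:, points:) call.

```ruby
# Hypothetical indexer: embeds each item and upserts the points in batches.
# Returns the total number of points written.
def index_products(products, llm:, store:, batch_size: 100)
  products.each_slice(batch_size).sum do |batch|
    points = batch.map do |product|
      { id: product[:id], vector: llm.embed(product[:text]), payload: product.slice(:id, :text) }
    end
    store.upsert(points) # one request per batch rather than per product
    points.size
  end
end

# In-memory stand-ins so the sketch runs without Ollama or Qdrant.
class RecordingStore
  attr_reader :calls

  def initialize
    @calls = []
  end

  def upsert(points)
    @calls << points
  end
end

class LengthEmbedder
  def embed(text)
    [text.length.to_f]
  end
end

products = (1..5).map { |i| { id: i, text: "Product #{i}" } }
store = RecordingStore.new
count = index_products(products, llm: LengthEmbedder.new, store: store, batch_size: 2)
puts "#{count} points in #{store.calls.size} batches" # prints "5 points in 3 batches"
```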

Let us now create our RagQueryService. It will be responsible for the following:

- Validating the query
- Generating the query embedding with the LLM
- Running the vector search
- Extracting and formatting the matching products
- Generating a prompt with the product context
- Responding with the LLM’s reply and the product suggestions

```ruby
class RagQueryService
  attr_reader :query, :llm, :qdrant

  def self.call(query)
    new(query).perform
  end

  def initialize(query)
    @query = query.to_s.strip # to_s guards against a missing param
    @llm = Llm::Mistral::Client.new
    @qdrant = VectorDb::Qdrant::Client.new
  end

  def perform
    return { error: 'Please enter a valid query.' } if @query.blank?

    query_embedding = @llm.embed(@query)           # embed the query
    results = @qdrant.search(query_embedding)      # vector search
    products = extract_products(results)           # extract and format products
    llm_response = generate_llm_response(products) # prompt the LLM with product context

    { llm_response:, products: }
  rescue StandardError => e
    Rails.logger.error("RAG Query Error: #{e.message}")
    { error: 'An error occurred while processing your query. Please try again later.' }
  end

  private

  def extract_products(results)
    results.fetch('result', []).map do |point|
      payload = point['payload']
      {
        name: payload['name'],
        description: truncate_description(payload['description']),
        price: payload['price'],
        image_url: '/assets/noimage/large.png' # Update this if dynamic image URLs are available
      }
    end
  end

  def generate_llm_response(products)
    formatted_products = products.map do |product|
      "- #{product[:name]}: #{product[:description]} (Price: $#{format('%.2f', product[:price].to_f)})"
    end.join("\n")

    prompt = <<~PROMPT
      The user asked: "#{@query}"

      Based on the user's query, here are some recommended products from the catalog:

      #{formatted_products}

      Respond in a sweet and short conversational tone.
    PROMPT

    @llm.complete(prompt)
  end

  # Keeps the first 10 words of a description
  def truncate_description(description)
    return 'No description available' if description.blank?

    words = description.split
    words.length > 10 ? "#{words.first(10).join(' ')}..." : description
  end
end
```
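To trace the data flow without Rails, Ollama or Qdrant, the sketch below runs the same sequence as perform (embed the query, search, format the products, prompt the LLM) against in-memory fakes. StubLlm, StubVectorDb and answer are hypothetical names, and blank? is replaced by a plain empty? check since ActiveSupport isn’t loaded.

```ruby
# Hypothetical fakes exposing the methods the service calls.
class StubLlm
  attr_reader :last_prompt

  def embed(_text)
    [1.0, 0.0]
  end

  def complete(prompt)
    @last_prompt = prompt
    'Try our Denim Shirt!'
  end
end

class StubVectorDb
  def search(_vector, limit: 5)
    payload = { 'name' => 'Denim Shirt', 'description' => 'A timeless shirt', 'price' => 11.99 }
    { 'result' => [{ 'payload' => payload }].first(limit) }
  end
end

# Same steps as RagQueryService#perform, with the clients injected.
def answer(query, llm:, vector_db:)
  query = query.to_s.strip
  return { error: 'Please enter a valid query.' } if query.empty?

  results = vector_db.search(llm.embed(query))
  products = results['result'].map { |point| point['payload'].transform_keys(&:to_sym) }
  lines = products.map do |product|
    "- #{product[:name]}: #{product[:description]} (Price: $#{format('%.2f', product[:price])})"
  end
  prompt = "The user asked: \"#{query}\"\n\n#{lines.join("\n")}\n\n" \
           'Respond in a sweet and short conversational tone.'
  { llm_response: llm.complete(prompt), products: products }
end

llm = StubLlm.new
puts answer('  sunny day  ', llm: llm, vector_db: StubVectorDb.new)[:llm_response]
puts llm.last_prompt
```

Injecting the clients like this is also a convenient way to unit-test the real service: pass fakes in instead of constructing Llm::Mistral::Client and VectorDb::Qdrant::Client inside initialize.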

The following example shows how you can use the service in a Rails controller:

```ruby
class RagQueryController < ApplicationController
  def create
    query = params[:query]
    result = RagQueryService.call(query)

    if result[:error]
      render json: { response: result[:error] }, status: :unprocessable_entity
    else
      render json: {
        llm_response: result[:llm_response],
        products: result[:products]
      }, status: :ok
    end
  end
end
```
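The controller still needs a route. A minimal sketch for config/routes.rb, assuming a POST endpoint at /rag_query (the path is our choice, not something the service dictates). Keep in mind that controllers inheriting from ActionController::Base have CSRF protection on by default, so JSON requests that don’t come from your own pages need a valid token, or the action has to opt out (for example with skip_forgery_protection).

```ruby
# config/routes.rb (sketch; the /rag_query path is an assumption)
Rails.application.routes.draw do
  post 'rag_query', to: 'rag_query#create'
end
```

A POST to /rag_query with a body such as {"query": "what can I wear on a sunny day"} then returns the llm_response and products keys rendered by the controller.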

When we receive the customer’s query, we create an embedding for it and retrieve the closest matching products from the vector database. We then combine those results into the context for the AI, which composes a natural, customer-friendly response. For the sunny-day question, the resulting request to the model looks like this:

```json
{
  "prompt": "The user asked: \"what can I wear on a sunny day\"\n\nBased on the user's query, here are some recommended products from the catalog:\n\n- Oversize T Shirt Wrapped On Back: A relaxed, oversized t-shirt cinched at the back with a... (Price: $10.99)\n- Shined Pants: A stylish, versatile denim jacket with a reflective... (Price: $10.99)\n- Scrappy Top: Scrappy, the adorable and energetic French Bulldog with a distinctive... (Price: $47.99)\n- Regular Shirt: A casual, button-up shirt with a round or V-neckline and... (Price: $91.99)\n- Denim Shirt: A timeless, comfortable denim shirt with a classic button-down front... (Price: $11.99)\n\nRespond in a sweet and short conversational tone.",
  "model": "mistral",
  "stream": false,
  "options": {
    "temperature": 0.7
  }
}
```
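That JSON has the shape of a request body for Ollama’s /api/generate endpoint. With "stream": false, Ollama replies with a single JSON object whose response field holds the generated text, which is the field Llm::Mistral::Client#complete reads through raw_response['response']. To reproduce the call without langchainrb, a stdlib-only sketch might look like this; the URL assumes Ollama’s default local port, and the helper names are hypothetical.

```ruby
require 'json'
require 'net/http'
require 'uri'

GENERATE_URL = URI('http://localhost:11434/api/generate') # assumed local Ollama

# Builds a body like the one above: prompt, model, no streaming, options.
def build_generate_request(prompt, model: 'mistral', temperature: 0.7)
  request = Net::HTTP::Post.new(GENERATE_URL)
  request['Content-Type'] = 'application/json'
  request.body = JSON.generate({
    prompt: prompt,
    model: model,
    stream: false,
    options: { temperature: temperature }
  })
  request
end

# With stream: false, the generated text is in the "response" field.
def parse_generation(body)
  JSON.parse(body).fetch('response')
end

# Sends the request to a running Ollama server and returns the reply text.
def generate(prompt)
  response = Net::HTTP.start(GENERATE_URL.host, GENERATE_URL.port) do |http|
    http.read_timeout = 120 # local generation can be slow
    http.request(build_generate_request(prompt))
  end
  parse_generation(response.body)
end
```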

You now have a full Retrieval-Augmented Generation (RAG) setup that:

- Embeds your Spree product catalog
- Lets users query in natural language
- Matches relevant products via vector similarity
- Generates human-friendly responses from an LLM

Excited about having your own virtual assistant in your online shop? reinteractive are experts in Spree- and Solidus-based commerce websites, and we can help you implement your very own AI-powered virtual customer assistant.

References:

- Step-by-Step Guide to Building LLM Applications with Ruby (Using Langchain and Qdrant): the guide I used to put all this together on top of a Spree-powered website
- Mistral (https://mistral.ai/): the LLM that generates the embeddings and provides the suggestions
- Qdrant (https://qdrant.tech/): vector database
- Ollama (https://ollama.com/): runs our LLM

P.S. If you have any questions, ask here.